From 4 Seconds to 40ms: A Full-Stack Performance Odyssey

How we transformed a painfully slow 4-second page load into a blazing-fast 40ms experience through SQL views. A deep dive into three failed attempts and the full-stack architecture that finally delivered instant performance.

From 4 Seconds to 40ms: A Full-Stack Performance Odyssey

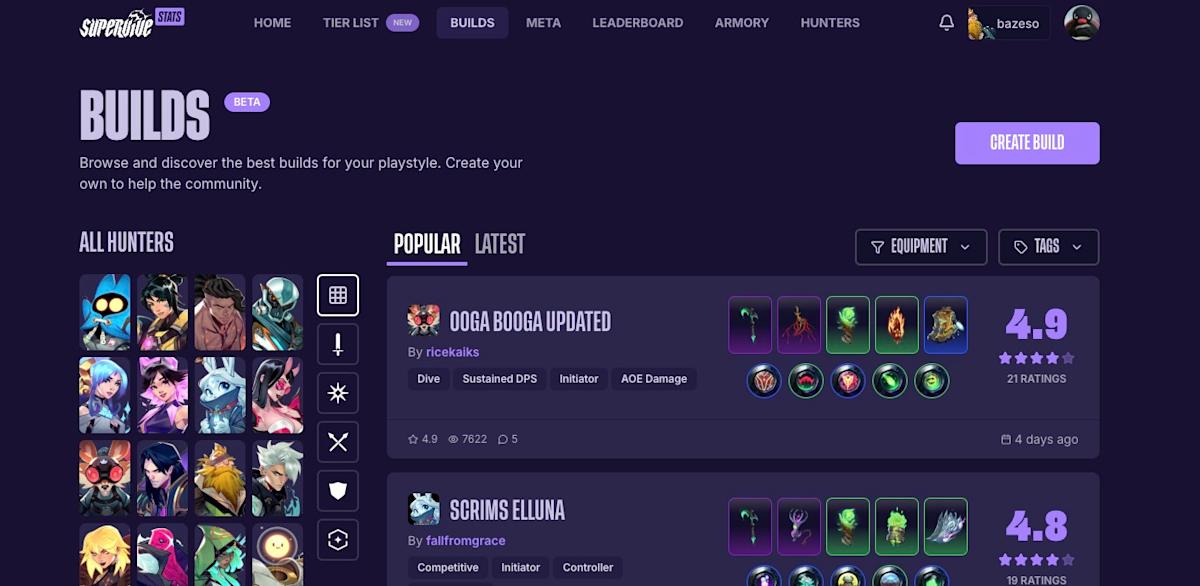

When we first launched the Builds page on supervive-stats.com/builds, it was painfully slow. A user's first view of our community-created builds took a staggering 4 seconds to load. For a core feature meant for browsing and discovery, this was an eternity.

This is the story of our journey to fix it. We'll cover our initial flawed attempts, a clever but ultimately failed intermediate solution, and the final full-stack architecture—from SQL views to Next.js hydration—that took our load times from an embarrassing 4,000ms to a blazing-fast sub-100ms.

A note on our approach: Throughout this entire journey, we consistently used server-side prefetching to improve initial load times. What evolved was our query strategy: starting with useInfiniteQuery, then moving to a simple useQuery, and finally returning to useInfiniteQuery with the right architecture to support it.

Note: As english is not my native language, this article has been redacted with the help of AI, especially for diagrams, phrasing and grammar.

The Starting Point: It Worked… Until It Didn't

In the beginning, everything was fine. With only a handful of builds, our database queries felt snappy. We were using PocketBase, and its expand feature was convenient, allowing us to fetch a build and all its related data—perks, abilities, user info, tags—in a single request. Sorting was handled on the client-side.

But as the number of builds grew towards 1,000, the cracks began to show. What once felt instant now involved a noticeable, frustrating delay. In the world of databases, 1,000 rows is tiny. This wasn't a scaling problem; it was an efficiency problem. Our convenient shortcuts had created two major bottlenecks: massive data payloads and inefficient sorting logic.

Phase 1: The Initial Implementation - An Infinite Mess

Our first version used TanStack Query's useInfiniteQuery hook with server-side prefetching, aiming for a seamless infinite scroll experience. However, it was built on two flawed assumptions.

First, we were fetching far too much data with a massive expand query in our PocketBase backend:

grip_1,grip_2,relic_1,relic_2,kicks,perk_1,perk_2,perk_3,perk_4,perk_5,abilities.ability,consumables,queues,hunter,user,tags

This resulted in a 3 MB JSON payload just for the initial view.

Second, and more catastrophically, our complex popularity algorithm—a hybrid of a Wilson score interval for votes and an engagement score for views and comments—was calculated entirely on the client-side.

This created a disastrous performance trap. To sort the builds correctly, the client needed data for all of them. As a result, every time fetchNextPage was called to load more items, we had to re-fetch the entire list of builds. The "infinite scroll" was an illusion that triggered a full 3 MB data load on every scroll. It was inefficient, expensive, and delivered a terrible user experience.

Phase 2: The Intermediate Fix - A Fast UI with a Fatal Flaw

Realizing the infinite query was untenable, we pivoted. We replaced useInfiniteQuery with a single useQuery that still used server-side prefetching but fetched all builds on the initial load. We then implemented a "fake" pagination system on the client, slicing the in-memory array of sorted builds to display pages.

The Good: Once the initial load was complete, the user experience was fantastic. Navigating between pages was instantaneous because all the data was already in memory. The UI was incredibly snappy.

The Bad: The First Contentful Paint (FCP) was now the entire problem. The user was stuck staring at a loading spinner for ~4 seconds while we downloaded and processed the massive dataset. We had traded a bad interaction experience for an abysmal initial load experience.

This phase taught us a critical lesson: Client-side sorting at scale is a losing battle. The real work had to move to the server.

Phase 3: The Final Architecture - A Holistic, Full-Stack Solution

Armed with this knowledge, we designed a new solution from the ground up, addressing the bottlenecks at every layer of the stack.

Part 1: Building a Fast Foundation (The Back-End)

-

Server-Side Calculation: First, we moved the entire hybrid popularity algorithm into a server-side CRON job. This job periodically pre-calculates the score for every build and stores it in a dedicated

builds_popularitytable. The client is no longer responsible for any heavy lifting. -

Eliminating the Back-Relation Bottleneck: With popularity pre-computed, we could now query for pages of sorted builds directly. However, our initial queries were still slow, taking 2-4 seconds. The culprit was a back-relation sort. We were asking the

buildstable to sort itself using a value from the separatebuilds_popularitytable.Even after precomputing popularity, we were still sorting on a back-relation:

-builds_popularity_via_build.popularityThis turned out to be a big performance sink. Why? Because PocketBase has to join across collections dynamically to resolve back-relations. That means every paginated request (

?page=1&perPage=10) still triggers heavy work under the hood — fetching, joining, and then sorting — which left us with 2–4 second response times for just 10 builds.In short: sorting on back-relations doesn't scale well.

Step 4: Flattening With a View Table

The solution was to remove the back-relation entirely by flattening popularity into the same row as the build. We created a SQL view that joins builds with builds_popularity:

CREATE VIEW builds_view_table AS SELECT b.id, b.name, -- (all other build columns) bp.popularity FROM builds b LEFT JOIN builds_popularity AS bp ON b.id = bp.build;Now the frontend just queries the

builds_view_tableand sorts with:-popularityNo back-relation, no heavy join cost, just fast queries. This change alone dropped our API response time for a page of results to well under 100ms.

(You might ask: why not just add a

popularitycolumn to thebuildstable? We use theupdatedtimestamp to show when an author last modified a build. If our CRON job constantly updated thebuildstable, that timestamp would become meaningless. The view provided the performance win without disrupting our existing schema logic.)

Part 2: Delivering an Instant Experience (The Front-End)

With a blazing-fast API, we could now architect the front-end to feel instantaneous.

-

Server-Side Rendering the First Page: We returned to

useInfiniteQuery, continuing our consistent use of server-side prefetching but now with a proper strategy. In our Next.js page component, we pre-fetch the very first page of builds on the server. This data is then passed to the client and used to hydrate the TanStack Query cache.// On the Next.js Page component (server-side) const queryClient = new QueryClient(); const query = buildsInfiniteQueryOptions(...options); // Prefetch the first page of data on the server await queryClient.prefetchInfiniteQuery(query); return ( <HydrationBoundary state={dehydrate(queryClient)}> <BuildsList {...props} /> </HydrationBoundary> );The result is that the user receives server-rendered HTML that already contains the first set of builds. The page is visible and meaningful immediately.

-

Seamless Client-Side Takeover and Lazy Loading: The

BuildsListcomponent on the client hydrates from this server-provided state, ensuring there's no flicker or re-fetching of visible data. Subsequent pages are now fetched efficiently withfetchNextPageas the user scrolls.// Inside the BuildsList component (client-side) const { data, fetchNextPage, hasNextPage, ... } = useInfiniteQuery(buildsInfiniteQueryOptions(...));Finally, we implemented one last optimization. The individual build cards themselves are now responsible for lazily fetching their detailed data (specific perks, items, etc.). Since many builds share the same items, TanStack Query's cache ensures that once an item is fetched for one card, it's instantly available from memory for any other card that needs it, minimizing network requests.

The Result: The Best of All Worlds

By moving through this iterative process, we landed on a solution that solved all our previous problems and delivered a superior user experience.

- API Response Time: From ~4,000ms to <100ms (often ~40ms).

- Payload Size: From ~3 MB for the initial view to a few kilobytes for the hydrated data.

- Perceived Performance: Near-instant load time thanks to SSR and hydration, fixing the FCP issue from our intermediate solution.

- Efficient Data Fetching: True, efficient API pagination, fixing the "re-fetch everything" nightmare of our initial approach.

This journey was a powerful lesson in full-stack development. A fast API is essential, but it's the intelligent synergy between a well-designed back-end and a smart front-end loading strategy that turns a fast API into a truly instantaneous user experience.

TL;DR

The Problem: A builds listing page that took 4 seconds to load due to massive data payloads (3 MB) and client-side sorting of complex popularity algorithms.

The Journey:

- Phase 1:

useInfiniteQuerywith server prefetching → Failed due to client-side sorting requiring full dataset re-fetches - Phase 2:

useQuerywith server prefetching → Fast navigation but terrible initial load (4s loading spinner) - Phase 3: Back to

useInfiniteQuerywith proper architecture → Success

Key Technical Solutions:

- Move computation server-side: Popularity algorithm → CRON job → dedicated table

- Eliminate back-relations: PocketBase back-relation sorts are slow → SQL view flattening data

- Smart prefetching: Server-side render first page → hydrate TanStack Query cache → seamless client takeover

- Lazy loading: Individual build cards fetch detailed data on-demand with shared caching

The Result: 4,000ms → 40ms load times, 3 MB → few KB payloads, instant perceived performance.

Key Lesson: Client-side sorting doesn't scale. The real performance wins come from intelligent backend design combined with strategic frontend hydration.